Eduardo Aguilar Pelaez

on 2 April 2020

This is part of a blog series on the impact that 5G and GPUs at the edge will have on the rollout of new AI solutions. You can read the other posts here.

Recap

In part 1 we discussed the industrial applications and benefits that 5G and fast compute at the edge will bring to AI products. In part 2 we looked more closely at how you can take advantage of this new opportunity. In this part we focus on the key technical barriers to AI applications that 5G and edge compute remove.

Model training

With deep neural networks, the rule of thumb is that the more training data you have (real or simulated), the better the results will be; you can see this for yourself here. However, processing more data costs more money when running on rented servers such as those in the public cloud.

This is incentivising many companies to set up their own data center and carry out model training on their own servers. Doing so used to require a lot of staff effort, but it is becoming simpler every day with tools such as MAAS (Metal as a Service) that provision bare-metal servers quickly.
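To make this trade-off concrete, here is a back-of-the-envelope break-even calculation between renting cloud GPUs and buying an equivalent server. All prices are made-up placeholders rather than real quotes, the model ignores depreciation, idle time and financing, and the function name `break_even_hours` is hypothetical.

```python
# Hypothetical prices for illustration only; substitute your own quotes.
CLOUD_GPU_COST_PER_HOUR = 3.00     # assumed rental price of one cloud GPU instance (USD/hour)
OWNED_SERVER_CAPEX = 20_000.00     # assumed purchase price of an equivalent GPU server (USD)
OWNED_SERVER_OPEX_PER_HOUR = 0.50  # assumed power, cooling and admin cost while training (USD/hour)


def break_even_hours(cloud_rate: float, capex: float, opex_rate: float) -> float:
    """Training hours after which owning the server becomes cheaper than renting."""
    if cloud_rate <= opex_rate:
        # Renting is never more expensive per hour, so owning never pays off.
        return float("inf")
    # Each training hour on the owned server saves (cloud_rate - opex_rate).
    return capex / (cloud_rate - opex_rate)


hours = break_even_hours(CLOUD_GPU_COST_PER_HOUR, OWNED_SERVER_CAPEX, OWNED_SERVER_OPEX_PER_HOUR)
print(f"Break-even after {hours:,.0f} GPU-hours")
```

The more training data a team processes, the sooner it crosses this break-even point, which is why growing data volumes push some companies towards their own servers.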

Data transfer

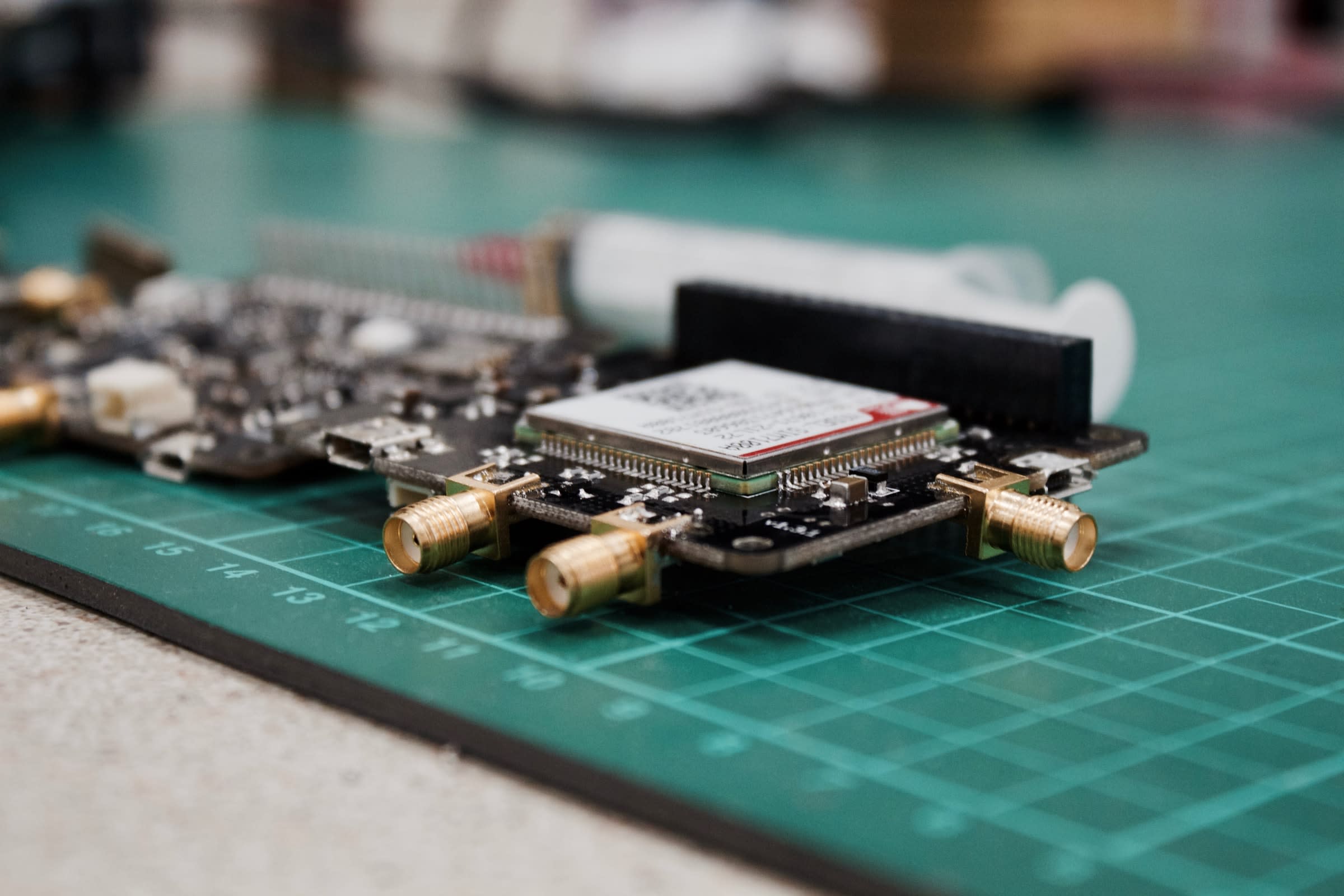

Transferring large amounts of data from sensors to servers can be costly and complex, so a pattern is emerging in which companies move compute servers physically closer to the data sources.

One example of this is the installation of Edge GPU servers near traffic cameras to process, record and store video of urban traffic.
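A quick estimate shows why streaming raw video to a distant data center becomes expensive. The sketch below assumes, purely for illustration, 100 cameras each streaming at 8 Mbit/s over a shared 1 Gbit/s uplink; these figures and the helper names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_video_gb(bitrate_mbps: float, cameras: int) -> float:
    """Gigabytes (10**9 bytes) of video produced per day by `cameras` streams."""
    bits = bitrate_mbps * 1e6 * SECONDS_PER_DAY * cameras
    return bits / 8 / 1e9


def link_utilisation(bitrate_mbps: float, cameras: int, uplink_mbps: float) -> float:
    """Fraction of the uplink consumed by streaming every camera to a remote server."""
    return bitrate_mbps * cameras / uplink_mbps


# Hypothetical deployment: 100 cameras at 8 Mbit/s sharing a 1 Gbit/s (1000 Mbit/s) uplink.
print(f"{daily_video_gb(8, 100):,.0f} GB/day, "
      f"{link_utilisation(8, 100, 1000):.0%} of the uplink")
```

With these assumed figures the cameras produce about 8.6 TB of video per day and occupy 80% of the uplink around the clock, which is the kind of load that processing and storing footage on a nearby Edge GPU server avoids.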

Implementation of AI/ML operations

When planning a great AI product, the following system requirements are critical to ensuring reliable, timely and secure ML operations:

- Model size after training, i.e. will the trained model fit on the target device where inference will run? If not, could this computation be offloaded?

- Response latency, i.e. will the trained model compute fast enough for the product to work as intended (e.g. fast speech recognition)? Alternatively, in the offloaded compute configuration, will the offloaded response time be fast enough once both connectivity and compute latency are accounted for?

- Update delivery mechanisms, i.e. how will the software and AI models on the device or the co-located compute be updated and patched to keep them secure?
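The first two requirements can be turned into a simple placement check. This is a hypothetical sketch rather than a standard tool: the `Model` and `Deployment` fields and every number in the usage lines are assumptions, and the third requirement, update delivery, is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Model:
    size_mb: float            # size of the trained model after optimisation
    device_latency_ms: float  # measured inference time on the target device
    edge_latency_ms: float    # measured inference time on the co-located edge GPU


@dataclass
class Deployment:
    device_memory_mb: float   # memory available for the model on the device
    network_rtt_ms: float     # round-trip time between device and edge server
    latency_budget_ms: float  # maximum response time the product tolerates


def choose_placement(model: Model, dep: Deployment) -> str:
    """Return 'device', 'edge' or 'infeasible' based on model size and latency."""
    fits_on_device = model.size_mb <= dep.device_memory_mb
    if fits_on_device and model.device_latency_ms <= dep.latency_budget_ms:
        return "device"
    # Offloaded response time includes both connectivity and compute latency.
    offload_ms = dep.network_rtt_ms + model.edge_latency_ms
    if offload_ms <= dep.latency_budget_ms:
        return "edge"
    return "infeasible"


# Hypothetical connected camera with 256 MB free for the model and a 50 ms budget.
camera = Deployment(device_memory_mb=256, network_rtt_ms=10, latency_budget_ms=50)
print(choose_placement(Model(size_mb=40, device_latency_ms=30, edge_latency_ms=5), camera))     # device
print(choose_placement(Model(size_mb=500, device_latency_ms=400, edge_latency_ms=15), camera))  # edge
```

The check prefers running on the device whenever that meets the budget, treating offloading as the alternative for models that are too large or too slow locally.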

Although the last few years have seen very interesting work on minimising trained model size with tools such as TensorFlow Lite, model response latency remains an issue for more data- and compute-heavy applications because of the limited compute available on the IoT device or robot.

In such cases, an alternative to running the AI model on the device is to offload this particular task to a nearby server.

Co-location and 5G connectivity

Co-location of sensors, servers and actuators provides the following two key technical benefits that make new solutions possible:

- High-bandwidth connectivity between the data sources and the servers, and

- Low latency between the IoT device and the co-located compute.

For example, imagine a street camera that detects an accident and triggers a traffic light to go ‘red’, sensing, perceiving and acting very quickly.

This could be achieved within a few milliseconds by a video-processing AI model running on a nearby Edge GPU that is connected to the camera via 5G.
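Whether such a loop is fast enough can be checked by adding up the latency of each hop. The stage names and millisecond figures below are hypothetical placeholders rather than measurements; real 5G and inference latencies depend on the network, the model and the hardware.

```python
from typing import Dict

# Hypothetical per-stage latencies (ms) for the camera -> edge GPU -> traffic light loop,
# counted from the moment a frame is handed to the radio.
STAGES_MS: Dict[str, float] = {
    "camera to edge GPU (5G uplink)": 2.0,
    "accident detection on edge GPU": 5.0,
    "edge GPU to traffic light (5G downlink)": 2.0,
}


def end_to_end_ms(stages: Dict[str, float]) -> float:
    """Total sense-perceive-act latency: the sum of every stage in the loop."""
    return sum(stages.values())


def slowest_stage(stages: Dict[str, float]) -> str:
    """Name of the stage that consumes the largest share of the budget."""
    return max(stages, key=stages.get)


print(f"End-to-end: {end_to_end_ms(STAGES_MS):.1f} ms; "
      f"slowest stage: {slowest_stage(STAGES_MS)}")
```

Breaking the budget down per hop makes it clear that both the wireless link and the inference time have to stay small; a slow link would eat into the time left for the model, and vice versa.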

In traditional settings, only wired connections could realistically have provided the benefits listed above. 5G will soon provide them reliably, almost everywhere and at an affordable price.

Cost and latency minimisation with computation offloading

To date, the computational capability of IoT systems has primarily been limited by the amount of money customers were willing to spend.

Big-ticket items such as a Tesla electric car can absorb the cost of a powerful compute board; however, cheaper devices for more pervasive applications, such as mobile phones, smart speakers or connected cameras, have much stricter compute constraints.

High-speed, low-latency connectivity such as 5G can accelerate the adoption of co-located Edge GPU servers as a solution for hardware-constrained IoT devices and robots.